As data becomes more abundant and information systems grow in complexity, stakeholders need solutions that reveal quality insights. Applying emerging technologies to the geospatial domain offers a unique opportunity to create transformative user experiences and intuitive workstreams for users and organizations to deliver on their missions and responsibilities.

In this post, we explore how you can integrate existing systems with Amazon Bedrock to create new workflows to unlock efficiencies insights. This integration can benefit technical, nontechnical, and leadership roles alike.

Introduction to geospatial data

Geospatial data is associated with a position relative to Earth (latitude, longitude, altitude). Numerical and structured geospatial data formats can be categorized as follows:

- Vector data – Geographical features, such as roads, buildings, or city boundaries, represented as points, lines, or polygons

- Raster data – Geographical information, such as satellite imagery, temperature, or elevation maps, represented as a grid of cells

- Tabular data – Location-based data, such as descriptions and metrics (average rainfall, population, ownership), represented in a table of rows and columns

Geospatial data sources might also contain natural language text elements for unstructured attributes and metadata for categorizing and describing the record in question. Geospatial Information Systems (GIS) provide a way to store, analyze, and display geospatial information. In GIS applications, this information is frequently presented with a map to visualize streets, buildings, and vegetation.

LLMs and Amazon Bedrock

Large language models (LLMs) are a subset of foundation models (FMs) that can transform input (usually text or image, depending on model modality) into outputs (generally text) through a process called generation. Amazon Bedrock is a comprehensive, secure, and flexible service for building generative AI applications and agents.

LLMs work in many generalized tasks involving natural language. Some common LLM use cases include:

- Summarization – Use a model to summarize text or a document.

- Q&A – Use a model to answer questions about data or facts from context provided during training or inference using Retrieval Augmented Generation (RAG).

- Reasoning – Use a model to provide chain of thought reasoning to assist a human with decision-making and hypothesis evaluation.

- Data generation – Use a model to generate synthetic data for testing simulations or hypothetical scenarios.

- Content generation – Use a model to draft a report from insights derived from an Amazon Bedrock knowledge base or a user’s prompt.

- AI agent and tool orchestration – Use a model to plan the invocation of other systems and processes. After other systems are invoked by an agent, the agent’s output can then be used as context for further LLM generation.

GIS can implement these capabilities to create value and improve user experiences. Benefits can include:

- Live decision-making – Taking real-time insights to support immediate decision-making, such as emergency response coordination and traffic management

- Research and analysis – In-depth analysis that humans or systems can identify, such as trend analysis, patterns and relationships, and environmental monitoring

- Planning – Using research and analysis for informed long-term decision-making, such as infrastructure development, resource allocation, and environmental regulation

Augmenting GIS and workflows with LLM capabilities leads to simpler analysis and exploration of data, discovery of new insights, and improved decision-making. Amazon Bedrock provides a way to host and invoke models as well as integrate the AI models with surrounding infrastructure, which we elaborate on in this post.

Combining GIS and AI through RAG and agentic workflows

LLMs are trained with large amounts of generalized information to discover patterns in how language is produced. To improve the performance of LLMs for specific use cases, approaches such as RAG and agentic workflows have been created. Retrieving policies and general knowledge for geospatial use cases can be accomplished with RAG, whereas calculating and analyzing GIS data would require an agentic workflow. In this section, we expand upon both RAG and agentic workflows in the context of geospatial use cases.

Retrieval Augmented Generation

With RAG, you can dynamically inject contextual information from a knowledge base during model invocation.

RAG supplements a user-provided prompt with data sourced from a knowledge base (collection of documents). Amazon Bedrock offers managed knowledge bases to data sources, such as Amazon Simple Storage Service (Amazon S3) and SharePoint, so you can provide supplemental information, such as city development plans, intelligence reports, or policies and regulations, when your AI assistant is generating a response for a user.

Knowledge bases are ideal for unstructured documents with information stored in natural language. When your AI model responds to a user with information sourced from RAG, it can provide references and citations to its source material. The following diagram shows how the systems connect together.

Because geospatial data is often structured and in a GIS, you can connect the GIS to the LLM using tools and agents instead of knowledge bases.

Tools and agents (to control a UI and a system)

Many LLMs, such as Anthropic’s Claude on Amazon Bedrock, make it possible to provide a description of tools available so your AI model can generate text to invoke external processes. These processes might retrieve live information, such as the current weather in a location or querying a structured data store, or might control external systems, such as starting a workflow or adding layers to a map. Some common geospatial functionality that you might want to integrate with your LLM using tools include:

- Performing mathematical calculations like the distance between coordinates, filtering datasets based on numeric values, or calculating derived fields

- Deriving information from predictive analysis models

- Looking up points of interest in structured data stores

- Searching content and metadata in unstructured data stores

- Retrieving real-time geospatial data, like traffic, directions, or estimated time to reach a destination

- Visualizing distances, points of interest, or paths

- Submitting work outputs such as analytic reports

- Starting workflows, like ordering supplies or adjusting supply chain

Tools are often implemented in AWS Lambda functions. Lambda runs code without the complexity and overhead of running servers. It handles the infrastructure management, enabling faster development, improved performance, enhanced security, and cost-efficiency.

Amazon Bedrock offers the feature Amazon Bedrock Agents to simplify the orchestration and integration with your geospatial tools. Amazon Bedrock agents follow instructions for LLM reasoning to break down a user prompt into smaller tasks and perform actions against identified tasks from action providers. The following diagram illustrates how Amazon Bedrock Agents works.

The following diagram shows how Amazon Bedrock Agents can enhance GIS solutions.

Solution overview

The following demonstration applies the concepts we’ve discussed to an earthquake analysis agent as an example. This example deploys an Amazon Bedrock agent with a knowledge base based on Amazon Redshift. The Redshift instance has two tables. One table is for earthquakes, which includes date, magnitude, latitude, and longitude. The second table holds the counites in California, described as polygon shapes. The geospatial capabilities of Amazon Redshift can relate these datasets to answer queries like which county had the most recent earthquake or which county has had the most earthquakes in the last 20 years. The Amazon Bedrock agent can generate these geospatially based queries based on natural language.

This script creates an end-to-end pipeline that performs the following steps:

- Processes geospatial data.

- Sets up cloud infrastructure.

- Loads and configures the spatial database.

- Creates an AI agent for spatial analysis.

In the following sections, we create this agent and test it out.

Prerequisites

To implement this approach, you must have an AWS account with the appropriate AWS Identity and Access Management (IAM) permissions for Amazon Bedrock, Amazon Redshift, and Amazon S3.

Additionally, complete the following steps to set up the AWS Command Line Interface (AWS CLI):

- Confirm you have access to the latest version of the AWS CLI.

- Sign in to the AWS CLI with your credentials.

- Make sure ./jq is installed. If not, use the following command:

Set up error handling

Use the following code for the initial setup and error handling:

This code performs the following functions:

- Creates a timestamped log file

- Sets up error trapping that captures line numbers

- Enables automatic script termination on errors

- Implements detailed logging of failures

Validate the AWS environment

Use the following code to validate the AWS environment:

This code performs the essential AWS setup verification:

- Checks AWS CLI installation

- Validates AWS credentials

- Retrieves account ID for resource naming

Set up Amazon Redshift and Amazon Bedrock variables

Use the following code to create Amazon Redshift and Amazon Bedrock variables:

Create IAM roles for Amazon Redshift and Amazon S3

Use the following code to set up IAM roles for Amazon S3 and Amazon Redshift:

Prepare the data and Amazon S3

Use the following code to prepare the data and Amazon S3 storage:

This code sets up data storage and retrieval through the following steps:

- Creates a unique S3 bucket

- Downloads earthquake and county boundary data

- Prepares for data transformation

Transform geospatial data

Use the following code to transform the geospatial data:

This code performs the following actions to convert the geospatial data formats:

- Transforms ESRI JSON to WKT format

- Processes county boundaries into CSV format

- Preserves spatial information for Amazon Redshift

Create a Redshift cluster

Use the following code to set up the Redshift cluster:

This code performs the following functions:

- Sets up a single-node cluster

- Configures networking and security

- Waits for cluster availability

Create a database schema

Use the following code to create the database schema:

This code performs the following functions:

- Creates a counties table with spatial data

- Creates an earthquakes table

- Configures appropriate data types

Create an Amazon Bedrock knowledge base

Use the following code to create a knowledge base:

This code performs the following functions:

- Creates an Amazon Bedrock knowledge base

- Sets up an Amazon Redshift data source

- Enables spatial queries

Create an Amazon Bedrock agent

Use the following code to create and configure an agent:

This code performs the following functions:

- Creates an Amazon Bedrock agent

- Associates the agent with the knowledge base

- Configures the AI model and instructions

Test the solution

Let’s observe the system behavior with the following natural language user inputs in the chat window.

Example 1: Summarization and Q&A

For this example, we use the prompt “Summarize which zones allow for building of an apartment.”

The LLM performs retrieval with a RAG approach, then uses the retrieved residential code documents as context to answer the user’s query in natural language.

This example demonstrates the LLM capabilities for hallucination mitigation, RAG, and summarization.

Example 2: Generate a draft report

Next, we input the prompt “Write me a report on how various zones and related housing data can be utilized to plan new housing development to meet high demand.”

The LLM retrieves relevant urban planning code documents, then summarizes the information into a standard reporting format as described in its system prompt.

This example demonstrates the LLM capabilities for prompt templates, RAG, and summarization.

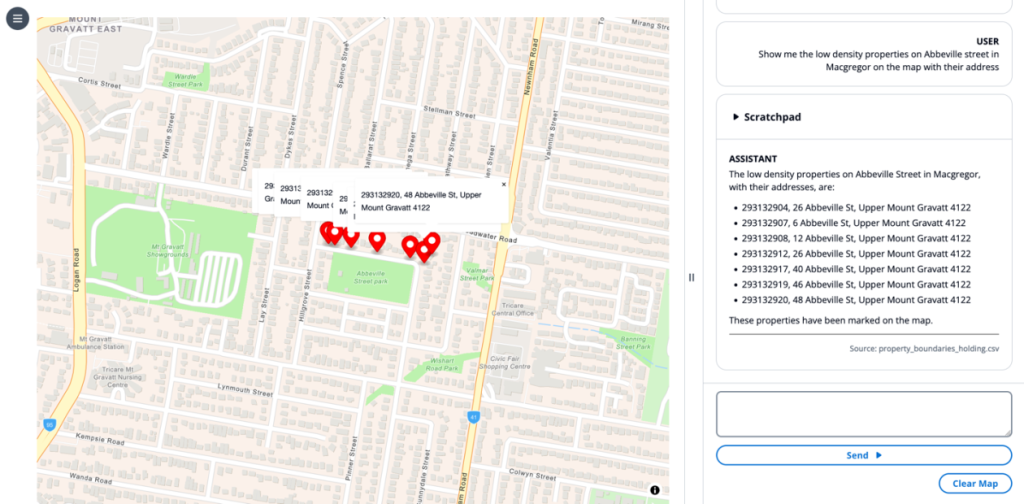

Example 3: Show places on the map

For this example, we use the prompt “Show me the low density properties on Abbeville street in Macgregor on the map with their address.”

The LLM creates a chain of thought to look up which properties match the user’s query and then invokes the draw marker tool on the map. The LLM provides tool invocation parameters in its scratchpad, awaits the completion of these tool invocations, then responds in natural language with a bulleted list of markers placed on the map.

This example demonstrates the LLM capabilities for chain of thought reasoning, tool use, retrieval systems using agents, and UI control.

Example 4: Use the UI as context

For this example, we choose a marker on a map and input the prompt “Can I build an apartment here.”

The “here” is not contextualized from conversation history but rather from the state of the map view. Having a state engine that can relay information from a frontend view to the LLM input adds a richer context.

The LLM understands the context of “here” based on the selected marker, performs retrieval to see the land development policy, and responds to the user in simple natural language, “No, and here is why…”

This example demonstrates the LLM capabilities for UI context, chain of thought reasoning, RAG, and tool use.

Example 5: UI context and UI control

Next, we choose a marker on the map and input the prompt “draw a .25 mile circle around here so I can visualize walking distance.”

The LLM invokes the draw circle tool to create a layer on the map centered at the selected marker, contextualized by “here.”

This example demonstrates the LLM capabilities for UI context, chain of thought reasoning, tool use, and UI control.

Clean up

To clean up your resources and prevent AWS charges from being incurred, complete the following steps:

- Delete the Amazon Bedrock knowledge base.

- Delete the Redshift cluster.

- Delete the S3 bucket.

Conclusion

The integration of LLMs with GIS creates intuitive systems that help users of different technical levels perform complex spatial analysis through natural language interactions. By using RAG and agent-based workflows, organizations can maintain data accuracy while seamlessly connecting AI models to their existing knowledge bases and structured data systems. Amazon Bedrock facilitates this convergence of AI and GIS technology by providing a robust platform for model invocation, knowledge retrieval, and system control, ultimately transforming how users visualize, analyze, and interact with geographical data.

For further exploration, Earth on AWS has videos and articles you can explore to understand how AWS is helping build GIS applications on the cloud.

About the Authors